This tutorial will show you how to write an AudioBuffer from the Web Audio API to a WAV audio file. You can find the project on GitHub here. If you’re not sure how to get an AudioBuffer from an audio file, check out my previous blog post about how to process an uploaded file with the Web Audio API. If you already have an AudioBuffer, you can skip to rendering it as a WAV file.

Getting the AudioBuffer

The AudioBuffer could come from audio recorded client-side, uploaded client-side, or fetched from the server. The tricky part is rendering it back to an audio file. Here’s a quick summary of the JavaScript code we’ll start with to get an AudioBuffer:

- The user uploads an audio file using the file input and clicks a button with id ‘compress_btn’ to start the process.

- We create a FileReader to read the file to an ArrayBuffer.

- We pass the ArrayBuffer into AudioContext.decodeAudioData(), receive an AudioBuffer as ‘buffer’, and process the audio.
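The same three steps can also be written as a Promise chain. Modern browsers support Blob.prototype.arrayBuffer() on File objects, which does the FileReader’s job in one call. This is a sketch, not the code the rest of this post builds on. `loadAudioBuffer` is a hypothetical helper, and `ctx` is assumed to be an AudioContext (or anything with a Promise-returning decodeAudioData()).

```javascript
// Hypothetical helper: read a File/Blob and decode it into an AudioBuffer.
// `ctx` is assumed to be an AudioContext (or any object exposing a
// Promise-returning decodeAudioData method).
async function loadAudioBuffer(file, ctx) {
  if (!file) {
    throw new Error("No file selected");
  }
  // Blob.prototype.arrayBuffer() replaces the FileReader + onload callback.
  const arrayBuffer = await file.arrayBuffer();
  // decodeAudioData resolves with the decoded AudioBuffer.
  return ctx.decodeAudioData(arrayBuffer);
}

// Usage in the page (browser only):
// compress_btn.addEventListener("click", async () => {
//   const buffer = await loadAudioBuffer(fileInput.files[0], audioCtx);
//   // Process Audio
// });
```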

var fileInput = document.getElementById('audio-file');
var audioCtx = new (window.AudioContext || window.webkitAudioContext)();
var compress_btn = document.getElementById('compress_btn');

// Load button listener
compress_btn.addEventListener("click", function() {
  // Check for file
  if (fileInput.files[0] == undefined) {
    // Stop the process and tell user they need to upload a file.
    return false;
  }

  var reader1 = new FileReader();
  reader1.onload = function(ev) {
    // Decode audio
    audioCtx.decodeAudioData(ev.target.result).then(function(buffer) {
      // Process Audio
    });
  };
  reader1.readAsArrayBuffer(fileInput.files[0]);
}, false);

Processing the Audio

We’ll use a compressor as an example of how to process the audio before rendering it as a file. First, in order to render the audio data as an audio file, we need to create an OfflineAudioContext. We need to create it inside the Promise’s success callback (where it says // Process Audio) so that the decoded buffer is in scope. Notice that we’re using the duration of the AudioBuffer (buffer.duration) to specify the length in samples of the OfflineAudioContext. 44100 samples per second is the most common sample rate for audio, but you can change it here (in both places) if you need to.
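OfflineAudioContext’s length is a whole number of sample frames, while buffer.duration is a floating-point number of seconds, so 44100 * buffer.duration is generally not an integer (the browser truncates it). A sketch of computing the frame count from the buffer’s own length and sample rate instead; `framesAtRate` is a hypothetical helper, and rounding up with Math.ceil (so a partial final frame isn’t dropped) is an assumption, not something the code in this post does.

```javascript
// Number of frames needed to hold `sourceLength` frames recorded at
// `sourceRate` when rendered at `targetRate`. Multiplying integers first
// avoids floating-point drift when the two rates are equal.
function framesAtRate(sourceLength, sourceRate, targetRate) {
  return Math.ceil((sourceLength * targetRate) / sourceRate);
}

// 2 seconds of 48 kHz audio rendered at 44.1 kHz:
console.log(framesAtRate(96000, 48000, 44100)); // 88200

// In the OfflineAudioContext options this would read:
// length: framesAtRate(buffer.length, buffer.sampleRate, 44100),
```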

// Process Audio
var offlineAudioCtx = new OfflineAudioContext({
  numberOfChannels: 2,
  length: 44100 * buffer.duration,
  sampleRate: 44100,
});

Next, we’ll create a buffer source for the OfflineAudioContext. This is the AudioBufferSourceNode of the audio we’ll be processing. Then we’ll set its buffer property to the AudioBuffer.

// Audio Buffer Source
var soundSource = offlineAudioCtx.createBufferSource();
soundSource.buffer = buffer;

// Create Compressor Node
var compressor = offlineAudioCtx.createDynamicsCompressor();
compressor.threshold.setValueAtTime(-20, offlineAudioCtx.currentTime);
compressor.knee.setValueAtTime(30, offlineAudioCtx.currentTime); // knee is 0 to 40 dB
compressor.ratio.setValueAtTime(5, offlineAudioCtx.currentTime);
compressor.attack.setValueAtTime(.05, offlineAudioCtx.currentTime);
compressor.release.setValueAtTime(.25, offlineAudioCtx.currentTime);

// Connect nodes to destination
soundSource.connect(compressor);
compressor.connect(offlineAudioCtx.destination);

// Start the source; without this call it never plays and the rendered file is silent
soundSource.start(0);

Next, to render the processed audio to an AudioBuffer, call startRendering() on the OfflineAudioContext. It returns a Promise that resolves with the rendered AudioBuffer, which we receive in the then() callback. There we call the make_download() function, which we will define in the next step, passing in the rendered AudioBuffer and the length in samples of the audio file.

offlineAudioCtx.startRendering().then(function(renderedBuffer) {
  make_download(renderedBuffer, offlineAudioCtx.length);
}).catch(function(err) {
  // Handle error
});

Create Functions to Render Audio Buffer as a Downloadable WAV File

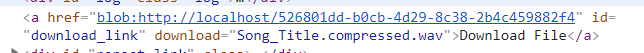

First, we’ll create the make_download() function. It passes the AudioBuffer to the bufferToWave() function and makes the resulting WAV Blob available for download through a URL created with URL.createObjectURL(). “download_link” is the id of a simple ‘a’ tag you’ll need to have in your page. You just need to set its ‘href’ attribute to the generated object URL and set its download property to the name of the new file. We’ll also create the generateFileName() function here, which builds that name from the uploaded file’s name.
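One refinement worth considering: a URL from URL.createObjectURL() keeps its Blob in memory until URL.revokeObjectURL() is called or the page is unloaded, so running make_download() repeatedly holds on to every WAV file generated so far. The sketch below releases the previous URL before assigning a new one. `setDownload` is a hypothetical helper, and `link` is assumed to be the download_link element.

```javascript
// Point a download link at a new Blob, releasing the Blob URL it held before.
// `link` is assumed to be an <a> element such as the "download_link" anchor.
function setDownload(link, blob, fileName) {
  // Free the previous Blob URL, if this link already had one.
  if (link.href && link.href.startsWith("blob:")) {
    URL.revokeObjectURL(link.href);
  }
  link.href = URL.createObjectURL(blob);
  link.download = fileName;
  return link.href;
}

// Inside make_download() this could replace the last few lines:
// setDownload(document.getElementById("download_link"),
//             bufferToWave(abuffer, total_samples), generateFileName());
```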

function make_download(abuffer, total_samples) {
  // Generate the WAV Blob and create a URL that points to it
  var new_file = URL.createObjectURL(bufferToWave(abuffer, total_samples));

  // Make it downloadable
  var download_link = document.getElementById("download_link");
  download_link.href = new_file;
  var name = generateFileName();
  download_link.download = name;
}

function generateFileName() {
  var origin_name = fileInput.files[0].name;
  var pos = origin_name.lastIndexOf('.');
  // Keep the whole name if it has no extension
  var no_ext = pos === -1 ? origin_name : origin_name.slice(0, pos);
  return no_ext + ".compressed.wav";
}

Now we’ll create the bufferToWave() function. This low-level function takes in the AudioBuffer and outputs a WAV file. Here’s what happens:

- We’ll get the data needed to write the WAV or WAVE header from the AudioBuffer.

- We’ll create a new ArrayBuffer big enough to hold the 44-byte header plus 2 bytes for every sample of every channel.

- Then we’ll create a DataView over that ArrayBuffer so we can write the WAVE header and other metadata at specific byte offsets. The writing is done with the DataView’s setUint32(), setUint16(), and setInt16() methods, each called with littleEndian set to true, because WAV files store numbers in little-endian byte order.

- After writing the WAVE header, we write the audio data into the ArrayBuffer sample by sample, interleaving the channels.

- The finished ArrayBuffer is then passed into the Blob constructor with the audio/wav MIME type, and the function returns this new Blob.
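The header step is the least obvious part. The chunk tags such as "RIFF" are written as 32-bit integers in little-endian order, which is why the constant is 0x46464952: its lowest byte, 0x52 (‘R’), is stored first, followed by 0x49 (‘I’) and two 0x46 (‘F’). The sketch below writes only the 44-byte header for 16-bit PCM and reads the tags back to show this. `writeWavHeader` and `readTag` are hypothetical helpers that mirror the header portion of bufferToWave().

```javascript
// Write a 44-byte canonical WAV header for 16-bit PCM into a DataView.
// dataLength is the size of the sample data in bytes (frames * channels * 2).
function writeWavHeader(view, numChannels, sampleRate, dataLength) {
  const bytesPerSample = 2; // 16-bit PCM, as in bufferToWave()
  const blockAlign = numChannels * bytesPerSample;
  let pos = 0;
  const u32 = (v) => { view.setUint32(pos, v, true); pos += 4; };
  const u16 = (v) => { view.setUint16(pos, v, true); pos += 2; };

  u32(0x46464952);              // "RIFF" (bytes 52 49 46 46)
  u32(36 + dataLength);         // file length - 8
  u32(0x45564157);              // "WAVE"
  u32(0x20746d66);              // "fmt " chunk id
  u32(16);                      // fmt chunk size for PCM
  u16(1);                       // audio format 1 = PCM
  u16(numChannels);
  u32(sampleRate);
  u32(sampleRate * blockAlign); // byte rate (avg. bytes/sec)
  u16(blockAlign);              // bytes per frame
  u16(8 * bytesPerSample);      // bits per sample
  u32(0x61746164);              // "data" chunk id
  u32(dataLength);              // data chunk size
  return pos;                   // 44: offset where sample data begins
}

// Read 4 bytes at `offset` as ASCII, to check a chunk tag.
function readTag(view, offset) {
  let tag = "";
  for (let i = 0; i < 4; i++) tag += String.fromCharCode(view.getUint8(offset + i));
  return tag;
}

const header = new DataView(new ArrayBuffer(44));
writeWavHeader(header, 2, 44100, 0);
console.log(readTag(header, 0), readTag(header, 8)); // RIFF WAVE
```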

// Convert an AudioBuffer to a Blob using WAVE representation
function bufferToWave(abuffer, len) {
  var numOfChan = abuffer.numberOfChannels,
      length = len * numOfChan * 2 + 44,
      buffer = new ArrayBuffer(length),
      view = new DataView(buffer),
      channels = [], i, sample,
      offset = 0,
      pos = 0;

  // write WAVE header
  setUint32(0x46464952); // "RIFF"
  setUint32(length - 8); // file length - 8
  setUint32(0x45564157); // "WAVE"

  setUint32(0x20746d66); // "fmt " chunk
  setUint32(16); // length = 16
  setUint16(1); // PCM (uncompressed)
  setUint16(numOfChan);
  setUint32(abuffer.sampleRate);
  setUint32(abuffer.sampleRate * 2 * numOfChan); // avg. bytes/sec
  setUint16(numOfChan * 2); // block-align
  setUint16(16); // 16-bit (hardcoded in this demo)

  setUint32(0x61746164); // "data" - chunk
  setUint32(length - pos - 4); // chunk length

  // write interleaved data
  for (i = 0; i < abuffer.numberOfChannels; i++)
    channels.push(abuffer.getChannelData(i));

  while (pos < length) {
    for (i = 0; i < numOfChan; i++) { // interleave channels
      sample = Math.max(-1, Math.min(1, channels[i][offset])); // clamp
      sample = (sample < 0 ? sample * 32768 : sample * 32767) | 0; // scale to 16-bit signed int
      view.setInt16(pos, sample, true); // write 16-bit sample
      pos += 2;
    }
    offset++; // next source sample
  }

  // create Blob
  return new Blob([buffer], {type: "audio/wav"});

  function setUint16(data) {
    view.setUint16(pos, data, true);
    pos += 2;
  }

  function setUint32(data) {
    view.setUint32(pos, data, true);
    pos += 4;
  }
}

Notice the line with the comment that reads ‘write 16-bit sample’. This line writes an audio sample to the new ArrayBuffer. Each time the while loop runs, view.setInt16() writes one 16-bit sample for the left channel and then one 16-bit sample for the right channel. (This assumes you are working with stereo audio; for mono, it writes one 16-bit sample for the single channel.) If you followed along with the last tutorial, your compressor.js file should now look something like this:

var fileInput = document.getElementById('audio-file');
var audioCtx = new (window.AudioContext || window.webkitAudioContext)();
var compress_btn = document.getElementById('compress_btn');

// Load button listener
compress_btn.addEventListener("click", function() {
  // Check for file
  if (fileInput.files[0] == undefined) {
    // Stop the process and tell user they need to upload a file.
    return false;
  }

  var reader1 = new FileReader();
  reader1.onload = function(ev) {
    // Decode audio
    audioCtx.decodeAudioData(ev.target.result).then(function(buffer) {
      // Process Audio
      var offlineAudioCtx = new OfflineAudioContext({
        numberOfChannels: 2,
        length: 44100 * buffer.duration,
        sampleRate: 44100,
      });

      // Audio Buffer Source
      var soundSource = offlineAudioCtx.createBufferSource();
      soundSource.buffer = buffer;

      // Create Compressor Node
      var compressor = offlineAudioCtx.createDynamicsCompressor();
      compressor.threshold.setValueAtTime(-20, offlineAudioCtx.currentTime);
      compressor.knee.setValueAtTime(30, offlineAudioCtx.currentTime);
      compressor.ratio.setValueAtTime(5, offlineAudioCtx.currentTime);
      compressor.attack.setValueAtTime(.05, offlineAudioCtx.currentTime);
      compressor.release.setValueAtTime(.25, offlineAudioCtx.currentTime);

      // Connect nodes to destination
      soundSource.connect(compressor);
      compressor.connect(offlineAudioCtx.destination);

      // Start the source; without this call the rendered file is silent
      soundSource.start(0);

      offlineAudioCtx.startRendering().then(function(renderedBuffer) {
        make_download(renderedBuffer, offlineAudioCtx.length);
      }).catch(function(err) {
        // Handle error
      });
    });
  };
  reader1.readAsArrayBuffer(fileInput.files[0]);
}, false);

function make_download(abuffer, total_samples) {
  // Generate audio file and assign URL
  var new_file = URL.createObjectURL(bufferToWave(abuffer, total_samples));

  // Make it downloadable
  var download_link = document.getElementById("download_link");
  download_link.href = new_file;
  var name = generateFileName();
  download_link.download = name;
}

// Utility to add "compressed" to the uploaded file's name
function generateFileName() {
  var origin_name = fileInput.files[0].name;
  var pos = origin_name.lastIndexOf('.');
  // Keep the whole name if it has no extension
  var no_ext = pos === -1 ? origin_name : origin_name.slice(0, pos);
  return no_ext + ".compressed.wav";
}

// Convert AudioBuffer to a Blob using WAVE representation
function bufferToWave(abuffer, len) {
  var numOfChan = abuffer.numberOfChannels,
      length = len * numOfChan * 2 + 44,
      buffer = new ArrayBuffer(length),
      view = new DataView(buffer),
      channels = [], i, sample,
      offset = 0,
      pos = 0;

  // write WAVE header
  setUint32(0x46464952); // "RIFF"
  setUint32(length - 8); // file length - 8
  setUint32(0x45564157); // "WAVE"

  setUint32(0x20746d66); // "fmt " chunk
  setUint32(16); // length = 16
  setUint16(1); // PCM (uncompressed)
  setUint16(numOfChan);
  setUint32(abuffer.sampleRate);
  setUint32(abuffer.sampleRate * 2 * numOfChan); // avg. bytes/sec
  setUint16(numOfChan * 2); // block-align
  setUint16(16); // 16-bit (hardcoded in this demo)

  setUint32(0x61746164); // "data" - chunk
  setUint32(length - pos - 4); // chunk length

  // write interleaved data
  for (i = 0; i < abuffer.numberOfChannels; i++)
    channels.push(abuffer.getChannelData(i));

  while (pos < length) {
    for (i = 0; i < numOfChan; i++) { // interleave channels
      sample = Math.max(-1, Math.min(1, channels[i][offset])); // clamp
      sample = (sample < 0 ? sample * 32768 : sample * 32767) | 0; // scale to 16-bit signed int
      view.setInt16(pos, sample, true); // write 16-bit sample
      pos += 2;
    }
    offset++; // next source sample
  }

  // create Blob
  return new Blob([buffer], {type: "audio/wav"});

  function setUint16(data) {
    view.setUint16(pos, data, true);
    pos += 2;
  }

  function setUint32(data) {
    view.setUint32(pos, data, true);
    pos += 4;
  }
}

After the Blob URL has been generated, the ‘a’ tag should look something like this.

Conclusion

This involves some very low-level tasks for JavaScript, but it’s an excellent introduction to some of the tools JavaScript includes to work with buffers. If you have any questions, you can leave them in the comments below.

I tried this, but with a recorded audio Blob URL instead of an uploaded file. I added my code as an answer here (I couldn’t post JS code on your site): https://stackoverflow.com/questions/15341912/how-to-go-from-blob-to-arraybuffer

However, the new audio is completely silent, even though it’s the same length as the original. This recorded audio should behave the same as an uploaded file. Any ideas?

I would need to see your code. I did have the same issue where the output was totally silent when I was developing this example, but I was able to fix it. Can you post a link to your code in CodePen or some other code sharing platform? You can’t post code in the comments for security reasons.

I’m working on a program that will take several short tag sound files and combine them into a new audio file (via recorder.js). I’m using an online audio context, but the recording depends on the audio files playing one after the other. I want to use an offline audio context to quickly combine all the audio files into a new WAV file. Do you know how I can combine separate tag sounds in a new offline context? None of the tutorials I’ve found explain how to do this.

I tried your example with a recorded audio on the browser and I get the downloaded file simply silent. Can you please look at the code and let me know where I am going wrong?

Here is the link to codepen:

https://codepen.io/rk_codepen/pen/abbyVVa

Hi RK, thanks for the comment and codepen. I fixed your code: https://codepen.io/rwgood18/pen/wvvyygd

It will not work in codepen because of the microphone permissions, but it will work on your server. I changed the “resample” function. You need to call the “startRendering” function of the OfflineAudioContext in the scope of a second FileReader.

Cool project!

Hey, just curious: in the line where we set the length, I get the multipliers, but where does the final + 44 come from?

```
length = len * numOfChan * 2 + 44
```

Ah! I just counted it all, and now I see that 44 is the sum of the sizes of all the 32-bit and 16-bit integers written in the header.

Yes! That’s correct. You can see a chart that illustrates it clearly here.
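The same count, written out field by field in the order bufferToWave() writes them (the labels are descriptive, not identifiers from the code):

```javascript
// Byte size of each header field written by bufferToWave(), in order.
const headerFields = [
  ["RIFF", 4], ["file length - 8", 4], ["WAVE", 4],
  ["fmt ", 4], ["fmt chunk length", 4], ["PCM format", 2],
  ["channels", 2], ["sample rate", 4], ["byte rate", 4],
  ["block align", 2], ["bits per sample", 2],
  ["data", 4], ["data chunk length", 4],
];

// Sum the sizes: 12 (RIFF header) + 24 (fmt chunk) + 8 (data chunk header)
const headerBytes = headerFields.reduce((sum, [, size]) => sum + size, 0);
console.log(headerBytes); // 44
```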

Hi this looks really great! I think it’s exactly what I need. It would be helpful if a completed example could be posted to a github repo.

I added a project to GitHub that uses this code in the context of an audio dynamics compressor: https://github.com/rwgood18/javascript-audio-file-dynamics-compressor

You can clone or download the code and run it in your local environment.

Hello, hope your quarantine is going well! I tried to create a basic example with the code you posted here, but the WAV file I get is silent, and I can’t figure out what’s wrong for the life of me. It would be much appreciated if you could take a look at my code and see what’s wrong.

https://codepen.io/sloopoo/pen/WNvYRNg

Thanks!!

Hi Alex, thanks for the question. I fixed your code: https://codepen.io/rwgood18/pen/MWwLeOL

It’s not working in the CodePen, but it should work in your local environment.

You missed two main things:

1.) Calling offlineAudioCtx.startRendering() in the context of a second FileReader

2.) Calling soundSource.start(0) after the second FileReader’s readAsArrayBuffer() method is called. After the readAsArrayBuffer() method has read the whole file into an ArrayBuffer, the FileReader’s onload method will be triggered.
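The second point is enough to cause silence on its own: an AudioBufferSourceNode outputs nothing until its start() method is called, so rendering an OfflineAudioContext without it yields a buffer of zeros. Here is the render step from this post pulled into a single function, with start() called before startRendering(). `renderCompressed` is a hypothetical name, and the compressor values are the ones used in this post.

```javascript
// Render a decoded AudioBuffer through a DynamicsCompressorNode offline.
// Resolves with the rendered AudioBuffer (browser only).
function renderCompressed(buffer, sampleRate = 44100) {
  const ctx = new OfflineAudioContext({
    numberOfChannels: 2,
    length: Math.ceil(buffer.duration * sampleRate),
    sampleRate: sampleRate,
  });

  const source = ctx.createBufferSource();
  source.buffer = buffer;

  const compressor = ctx.createDynamicsCompressor();
  compressor.threshold.setValueAtTime(-20, ctx.currentTime);
  compressor.knee.setValueAtTime(30, ctx.currentTime);
  compressor.ratio.setValueAtTime(5, ctx.currentTime);
  compressor.attack.setValueAtTime(0.05, ctx.currentTime);
  compressor.release.setValueAtTime(0.25, ctx.currentTime);

  source.connect(compressor);
  compressor.connect(ctx.destination);

  // Schedule playback before rendering; an unstarted source renders as silence.
  source.start(0);
  return ctx.startRendering();
}
```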

In scaling sample from [-1, 1] to a 16-bit, you have this code:

sample = (0.5 + sample < 0 ? sample * 32768 : sample * 32767)|0;

What is the point of the 0.5? I assume that scales [-1, -0.5) by 32768 and [-0.5, 0] by 32767. Is this intended, and if so why?

Hey Jonathan, that’s a really good question. I spent a few minutes looking at the code, and unfortunately I don’t know the answer or have time to dig into it. That chunk of code is mostly copied from here.
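For what it’s worth, JavaScript operator precedence makes that expression `((0.5 + sample) < 0) ? sample * 32768 : sample * 32767`, so only samples below -0.5 use the 32768 scale, and samples in [-0.5, 0) are scaled by 32767 instead. The difference is at most one step of the 16-bit range and never overflows, so the output is fine either way. A more conventional version branches on the sign alone; `floatTo16Bit` is a hypothetical name for this sketch.

```javascript
// Convert a float sample in [-1, 1] to a signed 16-bit integer.
// Negative values scale by 32768 and positive values by 32767, so -1 maps to
// -32768 and 1 maps to 32767, the two ends of the Int16 range.
function floatTo16Bit(sample) {
  const s = Math.max(-1, Math.min(1, sample)); // clamp out-of-range values
  return (s < 0 ? s * 0x8000 : s * 0x7fff) | 0; // truncate toward zero
}

console.log(floatTo16Bit(-0.25)); // -8192 (the original expression gives -8191)
```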

This code can only compile one file:

https://codepen.io/rwgood18/pen/MWwLeOL

How can I compile many audio files at once? I’m stuck; please help me.

You should be able to change the input to multiple by adding the “multiple” attribute. Then you would loop through the files and call reader1.readAsArrayBuffer(fileInput.files[index]) on each one. I haven’t tried this, but I think that would work.
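With the multiple attribute set, another option is to decode every selected file in parallel and wait for all of them with Promise.all(). A sketch, assuming a browser that supports Blob.prototype.arrayBuffer(); `loadAll` is a hypothetical helper and `ctx` is assumed to be an AudioContext. Each resulting AudioBuffer could then go through its own OfflineAudioContext and bufferToWave() call.

```javascript
// Decode every selected file; resolves with the AudioBuffers in input order.
// `files` is a FileList or an array of File/Blob objects.
function loadAll(files, ctx) {
  return Promise.all(
    Array.from(files, (file) =>
      file.arrayBuffer().then((data) => ctx.decodeAudioData(data))
    )
  );
}

// Usage: loadAll(fileInput.files, audioCtx).then((buffers) => { /* ... */ });
```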

Thanks for this walkthrough, it was immensely helpful! Quick question though: since OfflineAudioContext isn’t supported in Safari, any thoughts on a workaround?

There may be a workaround depending on what you’re trying to do. As far as this post goes, I don’t know a way to do it that is compatible with Safari. Here’s a similar question

How do you combine multiple audio files into a single WAV file?

I don’t have a working example of this, but you would need to read each of the files into an ArrayBuffer using FileReaders and decode it with the decodeAudioData function. Then concatenate the resulting AudioBuffers. See the appendBuffer function in the answer by cwilso here
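Concatenation works on the decoded sample data rather than on the raw file bytes. A sketch in the spirit of that appendBuffer approach, written against the standard AudioBuffer properties (numberOfChannels, length, sampleRate) and getChannelData(). `concatChannelData` is a hypothetical helper; it assumes every input shares one sample rate and keeps only as many channels as the input with the fewest (an assumption; duplicating mono into both stereo channels would be another option).

```javascript
// Concatenate AudioBuffer-like objects end to end.
// Returns one Float32Array per output channel.
function concatChannelData(buffers) {
  if (buffers.length === 0) return [];
  const rate = buffers[0].sampleRate;
  if (buffers.some((b) => b.sampleRate !== rate)) {
    throw new Error("All buffers must share one sample rate");
  }
  // Keep only the channels every input has (e.g. mono + stereo -> mono).
  const numChannels = Math.min(...buffers.map((b) => b.numberOfChannels));
  const totalLength = buffers.reduce((sum, b) => sum + b.length, 0);
  const channels = Array.from({ length: numChannels }, () => new Float32Array(totalLength));

  let offset = 0;
  for (const b of buffers) {
    for (let ch = 0; ch < numChannels; ch++) {
      // Copy this buffer's channel into the output at the running offset.
      channels[ch].set(b.getChannelData(ch), offset);
    }
    offset += b.length;
  }
  return channels;
}

// In the browser, wrap the result in a real AudioBuffer, e.g.:
// const out = audioCtx.createBuffer(channels.length, channels[0].length, buffers[0].sampleRate);
// channels.forEach((data, ch) => out.copyToChannel(data, ch));
```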

Hi Russell, as I said in the previous post, the var is missing before compressor =

That’s why I think people are getting no sound.

Great stuff mate.

Steve.

Ah good catch again Steve. I would like to point out that the var keyword is not required. It simply adds the aspect of scope (which can be really important) to the variable being declared. In this case, it’s not causing any issues. The silence people are getting is mainly from not using two separate FileReaders. That being said, it was a mistake on my part to omit the var keyword here. Thanks for pointing that out Steve. I went ahead and added it to the code example.

Hello, I am having trouble downloading the .wav Blob. I am running this in a React app. The problem is that I get href="blob:http://localhost:3000/". Where would I find the URL for the Blob?

Sean, I’m not very familiar with React. It could very well be that this code doesn’t work inside a React app. You might check that the buffer you’re passing in this line isn’t empty: return new Blob([buffer], {type: "audio/wav"}); Let me know if you find that this code is in fact incompatible with React.

RK’s code did it for me. Many thanks! I spent many hours trying to build something with the same goal and never could previously get it to work.

Great! I’m glad you got it working.

Hi!

Thank you for this post.

I am actually looking for a way to export to WAV and download a sound generated by simpletones.js.

I made a loop that lets the user create a melody (a sequence of several tones).

You can see it here:

Thegeekmaestro.fr/app and click on the microtone app.

Thank you in advance for any help or advice.

Best

Alexandre

Hi, thanks for the nice piece of code.

My question: the WAV file generated by the code is too large. Any idea how we can reduce the size?

Too large for what? You may need to break it into smaller chunks.

I am sending WAV files recorded with MediaRecorder to an S3 server. When I set numberOfChannels to 2, a 10-second recording comes out at up to 2 MB. Reducing numberOfChannels to 1 cut the size in half, but that’s still not small enough for me.

I was wondering if there is any way to control the bit rate as well. Would that help, and how could I achieve it?

I’m not sure what your size limit is, or if you’re just concerned about speed of file transfer over the network, but you could try compressing the audio file before you send it. Convert it to MP3 or something like that. I was planning to do a tutorial on this process, but I haven’t gotten around to it.

This code does not involve streaming, so bit rate is not a consideration here. You could probably reduce the bit depth to achieve smaller file size, but I wouldn’t recommend that. I would try compressing the file.
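Since the output is uncompressed 16-bit PCM, its size follows directly from the same arithmetic bufferToWave() uses: 44 header bytes plus frames × channels × bytes per sample. A quick sketch for estimating it; `wavSizeBytes` is a hypothetical helper, and the 16 kHz line assumes you lower the OfflineAudioContext sampleRate, which removes content above 8 kHz.

```javascript
// Size in bytes of a canonical PCM WAV file, matching bufferToWave()'s layout.
function wavSizeBytes(seconds, sampleRate, channels, bitsPerSample = 16) {
  const frames = Math.ceil(seconds * sampleRate);
  return 44 + frames * channels * (bitsPerSample / 8);
}

console.log(wavSizeBytes(10, 44100, 2)); // 1764044 (stereo, about 1.7 MB)
console.log(wavSizeBytes(10, 44100, 1)); // 882044 (mono halves the data)
console.log(wavSizeBytes(10, 16000, 1)); // 320044 (mono at a lower sample rate)
```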

Thanks for this great example! It helped me very much!

I just used it to mix a few seconds of white noise from my original WAV file into a new file.

At first I got a silent WAV file too, as some people mentioned: internally, it was full of zeros.

I solved it by adding the statement “soundSource.start()” just before “offlineAudioCtx.startRendering()…”, and it worked.

Thanks!